Metal³

The Metal³ project (pronounced: “Metal Kubed”) provides components for bare metal host management with Kubernetes. You can enrol your bare metal machines, provision operating system images, and then, if you like, deploy Kubernetes clusters to them. From there, operating and upgrading your Kubernetes clusters can be handled by Metal³. Moreover, Metal³ is itself a Kubernetes application, so it runs on Kubernetes, and uses Kubernetes resources and APIs as its interface.

Metal³ is one of the providers for the Kubernetes sub-project Cluster API. Cluster API provides infrastructure agnostic Kubernetes lifecycle management, and Metal³ brings the bare metal implementation.

This is paired with one of the components from the OpenStack ecosystem, Ironic for booting and installing machines. Metal³ handles the installation of Ironic as a standalone component (there’s no need to bring along the rest of OpenStack). Ironic is supported by a mature community of hardware vendors and supports a wide range of bare metal management protocols which are continuously tested on a variety of hardware. Backed by Ironic, Metal³ can provision machines, no matter the brand of hardware.

In summary, you can write Kubernetes manifests representing your hardware and your desired Kubernetes cluster layout. Then Metal³ can:

- Discover your hardware inventory

- Configure BIOS and RAID settings on your hosts

- Optionally clean a host’s disks as part of provisioning

- Install and boot an operating system image of your choice

- Deploy Kubernetes

- Upgrade Kubernetes or the operating system in your clusters with a non-disruptive rolling strategy

- Automatically remediate failed nodes by rebooting them and removing them from the cluster if necessary

You can even deploy Metal³ to your clusters so that they can manage other clusters using Metal³…

Metal³ is open-source and welcomes community contributions. The community meets at the following venues:

- #cluster-api-baremetal on Kubernetes Slack

- Metal³ development mailing list

- From the mailing list, you’ll also be able to find the details of a weekly Zoom community call on Wednesdays at 14:00 GMT

About this guide

This user guide aims to explain the Metal³ feature set, and provide how-tos for using Metal³. It’s not a tutorial (for that, see the Getting Started Guide). Nor is it a reference (for that, see the API Reference Documentation, and of course, the code itself.)

Project overview

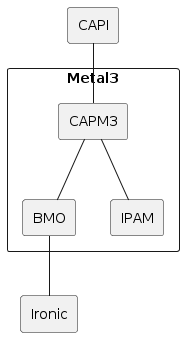

Metal3 consists of multiple sub-projects. The most notable are Bare Metal Operator, Cluster API provider Metal3 and the IP address manager. There is no requirement to use all of them.

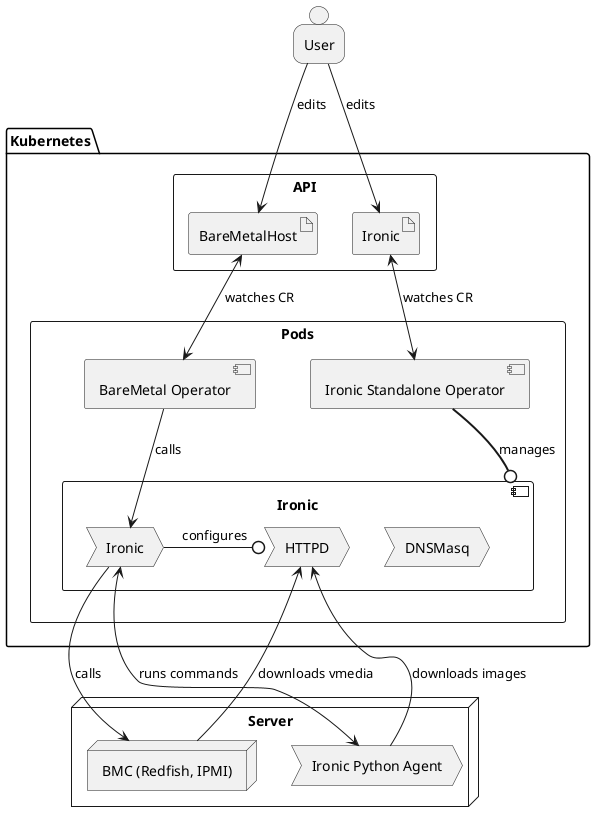

The stack, when including Cluster API and Ironic, looks like this:

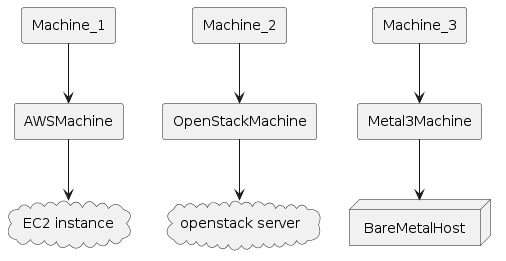

From a user perspective it may be more useful to visualize the Kubernetes resources. When using Cluster API, Metal3 works as any other infrastructure provider. The Machines get corresponding Metal3Machines, which in turn reference the BareMetalHosts.

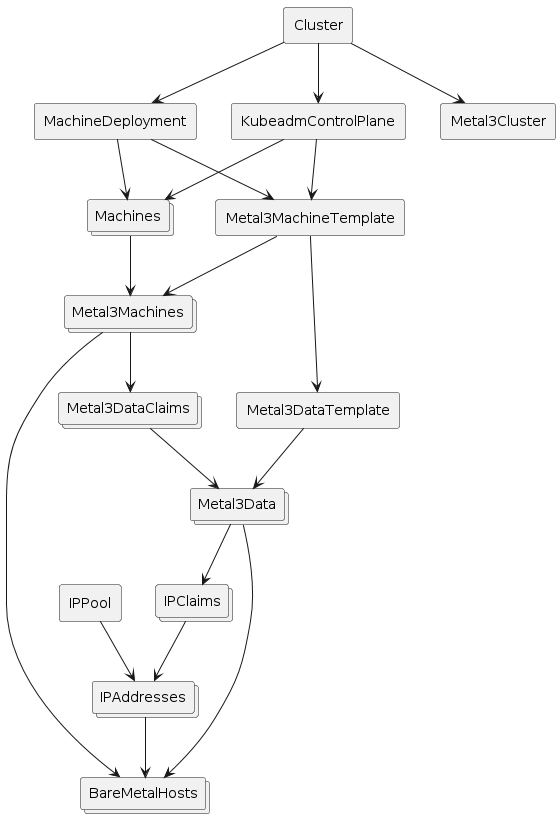

The following diagram shows more details about the Metal3 objects. Note that it is not showing everything and is meant just as an overview.

How does it work?

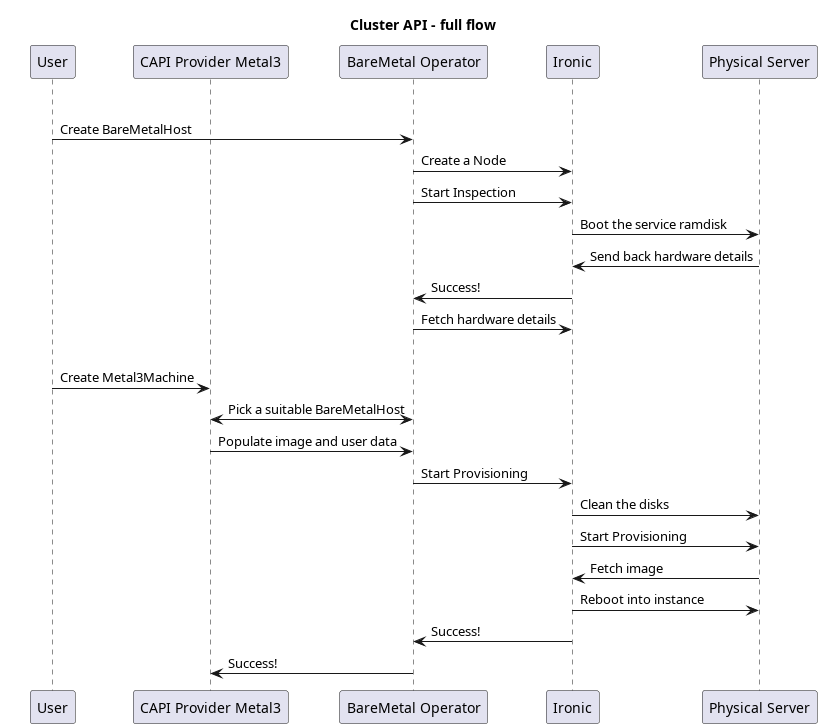

Metal3 relies on Ironic for interacting with the physical machines. Ironic in turn communicates with Baseboard Management Controllers (BMCs) to manage the machines. Ironic can communicate with the BMCs using protocols such as Redfish, IPMI, or iDRAC. In this way, it can power on or off the machines, change the boot device, and so on. For more information, see Ironic in Metal3

For more advanced operations, like writing an image to the disk, the Ironic Python Agent (IPA) is first booted on the machine. Ironic can then communicate with the IPA to perform the requested operation.

The BareMetal Operator (BMO) is a Kubernetes controller that exposes parts of Ironic’s capabilities through the Kubernetes API. This is essentially done through the BareMetalHost custom resource.

The Cluster API infrastructure provider for Metal3 (CAPM3) provides the necessary functionality to make Metal3 work with Cluster API. This means that Cluster API can be used to provision bare metal hosts into workload clusters. Similar to other infrastructure providers, CAPM3 adds custom resources such as Metal3Cluster and Metal3MachineTemplate in order to implement the Cluster API contract.

A notable addition to the contract is the management of metadata through Metal3DataTemplates and related objects. Users can provide metadata and network data through these objects. For network data specifically, it is worth mentioning the Metal3 IP address manager (IPAM) that can be used to assign IP addresses to the hosts.

Requirements

- Server(s) with baseboard management capabilities (i.e. Redfish, iDRAC, IPMI, etc.). For development you can use virtual machines with Sushy-tools. More information here.

- An Ironic instance. More information here.

- A Kubernetes cluster (the management cluster) where the user stores and manages the Metal3 resources. A kind cluster is enough for bootstrapping or development.

Quick-start for Metal3

This guide has been tested on Ubuntu server 24.04. It should be seen as an example rather than the absolute truth about how to deploy and use Metal3. We will cover two environments and two scenarios. The environments are

- a baremetal lab with actual physical servers and baseboard management controllers (BMCs), and

- a virtualized baremetal lab with virtual machines and sushy-tools acting as BMC.

In both of these, we will show how to use Bare Metal Operator and Ironic to manage the servers through a Kubernetes API, as well as how to turn the servers into Kubernetes clusters managed through Cluster API. These are the two scenarios.

In a nut-shell, this is what we will do:

- Quick-start for Metal3 - Prerequisites - Baremetal lab configuration - Virtualized configuration - Common setup - Image server - DHCP server - Management cluster - Create BareMetalHosts - (Scenario 1) Provision BareMetalHosts - (Scenario 2) Metal3 and Cluster API - Cleanup

Prerequisites

You will need the following tools installed.

- docker (or podman)

- kind or minikube (management cluster, not needed if you already have a “real” cluster that you want to use)

- clusterctl

- kubectl

- htpasswd

- virsh and virt-install for the virtualized setup

Baremetal lab configuration

The baremetal lab has two servers that we will call bml-01 and bml-02, as well

as a management computer where we will set up Metal3. The servers are equipped

with iLO 4 BMCs. These BMCs are connected to an “out of band” network

(192.168.1.0/24) and they have the following IP addresses.

- bml-01: 192.168.1.28

- bml-02: 192.168.1.14

There is a separate network for the servers (192.168.0.0/24). The management

computer is connected to both of these networks with IP addresses 192.168.1.7

and 192.168.0.150 respectively.

Finally, we will need the MAC addresses of the servers to keep track of which is which.

- bml-01: 9C:63:C0:AC:10:42

- bml-02: 80:c1:6e:7a:5a:a8

Virtualized configuration

If you do not have the hardware or perhaps just want to test things out without committing to a full baremetal lab, you may simulate it with virtual machines. In this section we will show how to create a virtual machine and use sushy-tools as a baseboard management controller for it.

The configuration is a bit simpler than in the baremetal lab because we don’t have a separate out of band network here. In the end we will have the BMC available as

- bml-vm-01: 192.168.222.1:8000/redfish/v1/Systems/bmh-vm-01

and the MAC address:

- bml-vm-01: 00:60:2f:31:81:01

Start by defining a libvirt network:

<network>

<name>baremetal-e2e</name>

<forward mode='nat'>

<nat>

<port start='1024' end='65535'/>

</nat>

</forward>

<bridge name='metal3'/>

<ip address='192.168.222.1' netmask='255.255.255.0'>

<dhcp>

<range start='192.168.222.3' end='192.168.222.99'/>

<!-- Reserve IP for convenience -->

<host mac='00:60:2f:31:81:01' name='bmh-vm-01' ip='192.168.222.101'/>

<bootp file='http://192.168.222.2:6180/boot.ipxe'/>

</dhcp>

</ip>

</network>

Save this as net.xml.

Metal3 relies on baseboard management controllers to manage the baremetal servers, so we need something similar for our virtual machines. This comes in the form of sushy-tools.

We need to create a configuration file for sushy-tools:

# Listen on the local IP address 192.168.222.1

SUSHY_EMULATOR_LISTEN_IP = u'192.168.222.1'

# Bind to TCP port 8000

SUSHY_EMULATOR_LISTEN_PORT = 8000

# Serve this SSL certificate to the clients

SUSHY_EMULATOR_SSL_CERT = None

# If SSL certificate is being served, this is its RSA private key

SUSHY_EMULATOR_SSL_KEY = None

# The OpenStack cloud ID to use. This option enables OpenStack driver.

SUSHY_EMULATOR_OS_CLOUD = None

# The libvirt URI to use. This option enables libvirt driver.

SUSHY_EMULATOR_LIBVIRT_URI = u'qemu:///system'

# Instruct the libvirt driver to ignore any instructions to

# set the boot device. Allowing the UEFI firmware to instead

# rely on the EFI Boot Manager

# Note: This sets the legacy boot element to dev="fd"

# and relies on the floppy not existing, it likely won't work

# if your VM has a floppy drive.

SUSHY_EMULATOR_IGNORE_BOOT_DEVICE = False

# The map of firmware loaders dependent on the boot mode and

# system architecture. Ideally the x86_64 loader will be capable

# of secure boot or not based on the chosen nvram.

SUSHY_EMULATOR_BOOT_LOADER_MAP = {

u'UEFI': {

u'x86_64': u'/usr/share/OVMF/OVMF_CODE.secboot.fd'

},

u'Legacy': {

u'x86_64': None

}

}

Finally, we start up the virtual baremetal lab and create VMs to simulate the servers. Feel free to adjust things as you see fit, but make sure to note the MAC address. That will be needed later. You can choose how many VMs to create. At least one is needed, although more could be nice for scenario 2, to have more than one node in the cluster.

#!/usr/bin/env bash

# Define and start the baremetal-e2e network

virsh -c qemu:///system net-define net.xml

virsh -c qemu:///system net-start baremetal-e2e

# We need to create veth pair to connect the baremetal-e2e net (defined above)

# and the docker network used by kind. This is to allow controllers in

# the kind cluster to communicate with the VMs and vice versa.

# For example, Ironic needs to communicate with IPA.

# These options are the same as what kind creates by default,

# except that we hard code the IPv6 subnet and specify a bridge name.

#

# NOTE! If you used kind before, you already have this network but

# without the fixed bridge name. Please remove it first in that case!

# docker network rm kind

docker network create -d=bridge \

-o com.docker.network.bridge.enable_ip_masquerade=true \

-o com.docker.network.driver.mtu=1500 \

-o com.docker.network.bridge.name="kind" \

--ipv6 --subnet "fc00:f853:ccd:e793::/64" \

kind

# Next create the veth pair

sudo ip link add metalend type veth peer name kindend

sudo ip link set metalend master metal3

sudo ip link set kindend master kind

sudo ip link set metalend up

sudo ip link set kindend up

# Then we need to set routing rules as well

sudo iptables -I FORWARD -i kind -o metal3 -j ACCEPT

sudo iptables -I FORWARD -i metal3 -o kind -j ACCEPT

# Start the sushy-emulator container that acts as BMC

docker run --name sushy-tools --rm --network host -d \

-v /var/run/libvirt:/var/run/libvirt \

-v "$(pwd)/sushy-emulator.conf:/etc/sushy/sushy-emulator.conf" \

-e SUSHY_EMULATOR_CONFIG=/etc/sushy/sushy-emulator.conf \

quay.io/metal3-io/sushy-tools:latest sushy-emulator

# Generate a VM definition xml file and then define the VM

# use --ram=8192 for Scenario 2

virt-install \

--connect qemu:///system \

--name bmh-vm-01 \

--description "Virtualized BareMetalHost" \

--osinfo=ubuntu-lts-latest \

--ram=4096 \

--vcpus=2 \

--disk size=25 \

--boot uefi,hd,network \

--import \

--network network=baremetal-e2e,mac="00:60:2f:31:81:01" \

--noautoconsole \

--print-xml > bmh-vm-01.xml

virsh define bmh-vm-01.xml

rm bmh-vm-01.xml

Common setup

This section is common for both the baremetal configuration and the virtualized environment. Specific configuration will always differ between environments though. We will go through how to configure and deploy Ironic and Baremetal Operator.

Image server

In order to do anything useful, we will need a server for hosting disk images that can be used to provision the servers. In this guide, we will use an nginx container for this. We also download some images that will be used later.

#!/usr/bin/env bash

mkdir disk-images

pushd disk-images || exit

wget https://cloud-images.ubuntu.com/jammy/current/jammy-server-cloudimg-amd64.img

wget https://cloud-images.ubuntu.com/jammy/current/SHA256SUMS

sha256sum --ignore-missing -c SHA256SUMS

wget https://artifactory.nordix.org/artifactory/metal3/images/k8s_v1.34.1/CENTOS_10_NODE_IMAGE_K8S_v1.34.1.qcow2

sha256sum CENTOS_10_NODE_IMAGE_K8S_v1.34.1.qcow2

# Convert to raw.

# This helps lower memory requirements, since the raw image can be streamed to disk

# instead of first loaded to memory by IPA for conversion.

qemu-img convert -f qcow2 -O raw CENTOS_10_NODE_IMAGE_K8S_v1.34.1.qcow2 CENTOS_10_NODE_IMAGE_K8S_v1.34.1.raw

# Local cache of IPA

wget https://tarballs.opendev.org/openstack/ironic-python-agent/dib/ipa-centos9-master.tar.gz

popd || exit

docker run --name image-server --rm -d -p 80:8080 \

-v "$(pwd)/disk-images:/usr/share/nginx/html" nginxinc/nginx-unprivileged

DHCP server

The BareMetalHosts must be able to call back to Ironic when going through the inspection phase. This means that they must have IP addresses in a network where they can reach Ironic. Any DHCP server can be used for this. We use the Ironic container image that includes dnsmasq. It is deployed automatically together with Ironic.

Management cluster

If you already have a Kubernetes cluster that you want to use, go ahead and use that. Please ensure that it is connected to the relevant networks so that Ironic can reach the BMCs and so that the BareMetalHosts can reach Ironic.

If you do not have a cluster already, you can create one using kind. Please note that this is absolutely not intended for production environments.

We will use the following configuration file for kind, save it as kind.yaml:

apiVersion: kind.x-k8s.io/v1alpha4

kind: Cluster

nodes:

- role: control-plane

# Open ports for Ironic

extraPortMappings:

# Ironic httpd

- containerPort: 6180

hostPort: 6180

listenAddress: "0.0.0.0"

protocol: TCP

# Ironic API

- containerPort: 6385

hostPort: 6385

listenAddress: "0.0.0.0"

protocol: TCP

As you can see, it has a few ports forwarded from the host. This is to make Ironic reachable when it is running inside the kind cluster.

We will also need to install cert-manager and Ironic Standalone Operator. Finally, we deploy Ironic and Bare Metal Operator.

#!/usr/bin/env bash

kind create cluster --config kind.yaml

# (Optional) Initialize CAPM3. This is only needed for scenario 2, but it also installs

# cert-manager, which is needed for pretty much everything else.

# If you skip this, make sure you install cert-manager separately!

clusterctl init --infrastructure=metal3 --ipam=metal3

kubectl apply -f https://github.com/metal3-io/ironic-standalone-operator/releases/latest/download/install.yaml

kubectl -n ironic-standalone-operator-system wait --for=condition=Available deploy/ironic-standalone-operator-controller-manager

# Now we can deploy Ironic and BMO

kubectl create ns baremetal-operator-system

# Apply Ironic with retry logic (up to 5 attempts with 10 second delays).

# The IrSO webhook is not guaranteed to be ready when the IrSO deployment is,

# so some retries may be needed.

MAX_RETRIES=5

RETRY_DELAY=10

RETRY_COUNT=0

echo "Applying Ironic configuration..."

while [[ "${RETRY_COUNT}" -lt "${MAX_RETRIES}" ]]; do

if kubectl apply -k ironic; then

echo "Successfully applied Ironic configuration"

break

else

RETRY_COUNT=$((RETRY_COUNT + 1))

echo "Failed to apply Ironic configuration. Retrying in ${RETRY_DELAY} seconds... (Attempt ${RETRY_COUNT}/${MAX_RETRIES})"

sleep ${RETRY_DELAY}

fi

done

if [[ "${RETRY_COUNT}" -eq "${MAX_RETRIES}" ]]; then

echo "ERROR: Failed to apply Ironic configuration after ${MAX_RETRIES} attempts. Exiting."

exit 1

fi

kubectl apply -k bmo

We use the following manifest to deploy Ironic. Feel free to adjust as needed for your environment.

# kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: baremetal-operator-system

resources:

- ironic.yaml

- certificate.yaml

# ironic.yaml

apiVersion: ironic.metal3.io/v1alpha1

kind: Ironic

metadata:

name: ironic

namespace: baremetal-operator-system

spec:

networking:

dhcp:

rangeBegin: "192.168.222.100"

rangeEnd: "192.168.222.200"

networkCIDR: "192.168.222.0/24"

interface: "eth0"

ipAddress: "192.168.222.2"

ipAddressManager: "keepalived"

tls:

certificateName: ironic-cert

# certificate.yaml

apiVersion: cert-manager.io/v1

kind: Issuer

metadata:

name: selfsigned-issuer

namespace: baremetal-operator-system

spec:

selfSigned: {}

---

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

name: ironic-cacert

namespace: baremetal-operator-system

spec:

commonName: ironic-ca

isCA: true

issuerRef:

kind: Issuer

name: selfsigned-issuer

secretName: ironic-cacert

---

apiVersion: cert-manager.io/v1

kind: Issuer

metadata:

name: ca-issuer

namespace: baremetal-operator-system

spec:

ca:

secretName: ironic-cacert

---

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

name: ironic-cert

namespace: baremetal-operator-system

spec:

ipAddresses:

- 192.168.222.2

dnsNames:

- ironic.baremetal-operator-system.svc

issuerRef:

kind: Issuer

name: ca-issuer

secretName: ironic-cert

For the Bare Metal Operator, we use a kustomization that looks like this:

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: baremetal-operator-system

# This is the kustomization that we build on. You can download it and change

# the URL to a relative path if you do not want to access it over the network.

# Note that the ref=main specifies the version to use.

# We use main here simply because the integration with IrSO is not included in a release yet.

resources:

- https://github.com/metal3-io/baremetal-operator/config/use-irso?ref=main

Create BareMetalHosts

Now that we have Bare Metal Operator deployed, let’s put it to use by creating BareMetalHosts (BMHs) to represent our servers. You will need the protocol and IPs of the BMCs, as well as credentials for accessing them, and the servers MAC addresses.

Create one secret for each BareMetalHost, containing the credentials for accessing its BMC. No credentials are needed in the virtualized setup but you still need to create the secret with some values. Here is an example:

apiVersion: v1

kind: Secret

metadata:

name: bml-01

type: Opaque

stringData:

username: replaceme

password: replaceme

Then continue by creating the BareMetalHost manifest. You can put it in the same

file as the secret if you want. Just remember to separate the two resources with

one line containing ---.

Here is an example of a BareMetalHost referencing the secret above with MAC

address and BMC address matching our bml-01 server (see supported

hardware for information on BMC addressing).

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: bml-01

spec:

online: true

bootMACAddress: 9C:63:C0:AC:10:42

bootMode: UEFI

bmc:

address: idrac-virtualmedia://192.168.1.28

credentialsName: bml-01

disableCertificateVerification: true

Here is the same for the virtualized BareMetalHost:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: bml-vm-01

spec:

online: true

bootMACAddress: 00:60:2f:31:81:01

bootMode: UEFI

hardwareProfile: libvirt

bmc:

address: redfish-virtualmedia+http://192.168.222.1:8000/redfish/v1/Systems/bmh-vm-01

credentialsName: bml-01

Apply these in the cluster with kubectl apply -f path/to/file.

You should now be able to see the BareMetalHost go through registering and

inspecting phases before it finally becomes available. Check with

kubectl get bmh. The output should look similar to this:

NAME STATE CONSUMER ONLINE ERROR AGE

bml-01 available true 26m

(Scenario 1) Provision BareMetalHosts

If you want to manage the BareMetalHosts directly, keep reading. If you would rather use Cluster API to make Kubernetes clusters out of them, skip to the next section.

Edit the BareMetalHost to add details of what image you want to provision it with. For example:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: bml-vm-01

spec:

online: true

bootMACAddress: 00:60:2f:31:81:01

bootMode: UEFI

hardwareProfile: libvirt

bmc:

address: redfish-virtualmedia+http://192.168.222.1:8000/redfish/v1/Systems/bmh-vm-01

credentialsName: bml-01

image:

checksumType: sha256

checksum: http://192.168.222.1/SHA256SUMS

format: qcow2

url: http://192.168.222.1/jammy-server-cloudimg-amd64.img

Note that the URL for the disk image is not using the out of band network.

Image provisioning works so that the Ironic Python Agent is first booted on the

machine. From there (i.e. not in the out of band network) it downloads the disk

image and writes it to disk. If the machine has several disks, and you want to

specify which one to use, set rootDeviceHints

(otherwise, /dev/sda is used by default).

The manifest above is enough to provision the BareMetalHost, but unless you have everything you need already baked in the disk image, you will most likely want to add some user-data and network-data. We will show here how to configure authorized ssh keys using user-data (see instance customization for more details).

First, we create a file (user-data.yaml) with the user-data:

#cloud-config

users:

- name: user

ssh_authorized_keys:

- ssh-ed25519 ABCD... user@example.com

Then create a secret from it.

kubectl create secret generic user-data --from-file=value=user-data.yaml --from-literal=format=cloud-config

Add the following to the BareMetalHost manifest to make it use the user-data:

spec:

...

userData:

name: user-data

namespace: default

Apply the changes with kubectl apply -f path/to/file. You should now see the

BareMetalHost go into provisioning and eventually become provisioned.

NAME STATE CONSUMER ONLINE ERROR AGE

bml-01 provisioned true 2h

You can now check the logs of the DHCP server to see what IP the BareMetalHost

got (docker logs dnsmasq) and try to ssh to it.

(Scenario 2) Metal3 and Cluster API

If you want to turn the BareMetalHosts into Kubernetes clusters, you should consider using Cluster API and the infrastructure provider for Metal3. In this section we will show how to do it.

Initialize the Cluster API core components and the infrastructure provider for Metal3 (if you didn’t already do it):

clusterctl init --infrastructure metal3 --ipam=metal3

Now we need to set some environment variables that will be used to render the manifests from the cluster template. Most of them are related to the disk image that we downloaded above.

Note: There are many ways to configure and expose the API endpoint of the cluster. You need to decide how to do it. It will not “just work”. Here are some options:

- Configure a specific IP for the control-plane server through the DHCP server. This is doesn’t require anything extra but it is also very limited. You will not be able to upgrade the cluster for example.

- Set up a load balancer separately and use that as API endpoint.

- Use keepalived or kube-vip or similar to assign a VIP to one of the control-plane nodes.

# Baremetal lab image variables

# export IMAGE_URL="http://192.168.0.150/CENTOS_10_NODE_IMAGE_K8S_v1.34.1.qcow2"

# export IMAGE_CHECKSUM="afa7e95ee6fb92b952ab85bae4d01033651e690cf04a626c668041d7b94ddd4a"

# export IMAGE_FORMAT="qcow2"

# Virtualized setup variables

export IMAGE_URL="http://192.168.222.1/CENTOS_10_NODE_IMAGE_K8S_v1.34.1.raw"

export IMAGE_CHECKSUM="20537529c0588e1c3d1929981207ef6fac73df7b2500b84f462d09badcc670ea"

export IMAGE_FORMAT="raw"

# Common variables

export IMAGE_CHECKSUM_TYPE="sha256"

export KUBERNETES_VERSION="v1.34.1"

# Make sure this does not conflict with other networks

export POD_CIDR='["192.168.10.0/24"]'

# These can be used to add user-data

export CTLPLANE_KUBEADM_EXTRA_CONFIG="

preKubeadmCommands:

- systemctl enable --now crio

users:

- name: user

sshAuthorizedKeys:

- ssh-ed25519 ABCD... user@example.com"

export WORKERS_KUBEADM_EXTRA_CONFIG="

preKubeadmCommands:

- systemctl enable --now crio

users:

- name: user

sshAuthorizedKeys:

- ssh-ed25519 ABCD... user@example.com"

# NOTE! You must ensure that this is forwarded or assigned somehow to the

# server(s) that is selected for the control-plane.

# We reserved this address in the net.xml as a basic way to get a fixed IP.

export CLUSTER_APIENDPOINT_HOST="192.168.222.101"

export CLUSTER_APIENDPOINT_PORT="6443"

With the variables in place, we can render the manifests and apply:

clusterctl generate cluster my-cluster --control-plane-machine-count 1 --worker-machine-count 0 | kubectl apply -f -

You should see BareMetalHosts be provisioned as they are “consumed” by the Metal3Machines:

NAME STATE CONSUMER ONLINE ERROR AGE

bml-02 provisioned my-cluster-controlplane-8z46n true 68m

If all goes well and the API endpoint is correctly configured, you should eventually get a working cluster. Note that it will not become fully healthy until a CNI is deployed.

Deploy Calico as CNI:

clusterctl get kubeconfig my-cluster > kubeconfig.yaml

kubectl --kubeconfig=kubeconfig.yaml apply --server-side -f https://raw.githubusercontent.com/projectcalico/calico/v3.31.0/manifests/calico.yaml

Check cluster health with clusterctl describe cluster my-cluster:

NAME REPLICAS AVAILABLE READY UP TO DATE STATUS REASON SINCE MESSAGE

Cluster/my-cluster 1/1 1 1 1 Available: True Available 48s

├─ClusterInfrastructure - Metal3Cluster/my-cluster Ready: True NoReasonReported 32m

└─ControlPlane - KubeadmControlPlane/my-cluster 1/1 1 1 1

└─Machine/my-cluster-2zc9x 1 1 1 1 Ready: True Ready 48s

Cleanup

If you created a cluster using Cluster API, delete that first:

kubectl delete cluster my-cluster

Delete all BareMetalHosts with kubectl delete bmh <name>. This ensures that

the servers are cleaned and powered off.

Delete the management cluster.

kind delete cluster

Stop image server. It is automatically removed when stopped.

docker stop image-server

You may also want to delete the disk images:

rm -r disk-images

If you did the virtualized setup you will also need to cleanup the sushy-tools container and the VM(s).

#!/usr/bin/env bash

docker rm -f sushy-tools

virsh -c qemu:///system destroy --domain "bmh-vm-01"

virsh -c qemu:///system undefine --domain "bmh-vm-01" --remove-all-storage --nvram

# Clear network

virsh -c qemu:///system net-destroy baremetal-e2e

virsh -c qemu:///system net-undefine baremetal-e2e

sudo iptables -D FORWARD -i kind -o metal3 -j ACCEPT

sudo iptables -D FORWARD -i metal3 -o kind -j ACCEPT

sudo ip link delete metalend type veth

Baremetal provisioning

This is a guide to provision baremetal servers using the Metal3 project. It is a generic guide with basic implementation, different hardware may require different configuration.

In this guide we will use minikube as management cluster.

All commands are executed on the host where minikube is set up.

This is a separate machine, e.g. your laptop or one of the servers, that has access to the network where the servers are in order to provision them.

Install requirements on the host

Install following requirements on the host:

- Python

- Golang

- Docker for ubuntu and podman for Centos

- Ansible

See Install Ironic for other requirements.

Configure host

-

Create network settings. We are creating 2 bridge interfaces: provisioning and external. The provisioning interface is used by Ironic to provision the BareMetalHosts and the external interface allows them to communicate with each other and connect to internet.

# Create a veth interface peer. sudo ip link add ironicendpoint type veth peer name ironic-peer # Create provisioning bridge. sudo brctl addbr provisioning sudo ip addr add dev ironicendpoint 172.22.0.1/24 sudo brctl addif provisioning ironic-peer sudo ip link set ironicendpoint up sudo ip link set ironic-peer up # Create the external bridge sudo brctl addbr external sudo ip addr add dev external 192.168.111.1/24 sudo ip link set external up # Add udp forwarding to firewall, this allows to use ipmitool (port 623) # as well as allowing TFTP traffic outside the host (random port) iptables -A FORWARD -p udp -j ACCEPT # Add interface to provisioning bridge brctl addif provisioning eno1 # Set VLAN interface to be up ip link set up dev bmext # Check if bmext interface is added to the bridge brctl show baremetal | grep bmext # Add bmext to baremetal bridge brctl addif baremetal bmext

Prepare image cache

-

Start httpd container. This is used to host the the OS images that the BareMetalHosts will be provisioned with.

sudo docker run -d --net host --privileged --name httpd-infra -v /opt/metal3-dev-env/ironic:/shared --entrypoint /bin/runhttpd --envDownload the node image and put it in the folder where the httpd container can host it.

wget -O /opt/metal3-dev-env/ironic/html/images https://artifactory.nordix.org/artifactory/metal3/images/k8s_v1.33.0Convert the qcow2 image to raw format and get the hash of the raw image

# Change IMAGE_NAME and IMAGE_RAW_NAME according to what you download from artifactory cd /opt/metal3-dev-env/ironic/html/images IMAGE_NAME="CENTOS_9_NODE_IMAGE_K8S_v1.33.0.qcow2" IMAGE_RAW_NAME="CENTOS_9_NODE_IMAGE_K8S_v1.33.0-raw.img" qemu-img convert -O raw "${IMAGE_NAME}" "${IMAGE_RAW_NAME}" # Create sha256 hash sha256sum "${IMAGE_RAW_NAME}" | awk '{print $1}' > "${IMAGE_RAW_NAME}.sha256sum"

Launch management cluster using minikube

-

Create a minikube cluster to use as management cluster.

minikube start # Configuring ironicendpoint with minikube minikube ssh sudo brctl addbr ironicendpoint minikube ssh sudo ip link set ironicendpoint up minikube ssh sudo brctl addif ironicendpoint eth2 minikube ssh sudo ip addr add 172.22.0.9/24 dev ironicendpoint -

Initialize Cluster API and the Metal3 provider.

kubectl create namespace metal3 clusterctl init --core cluster-api --bootstrap kubeadm --control-plane kubeadm --infrastructure metal3 # NOTE: In clusterctl init you can change the version of provider like this 'cluster-api:v1.12.0', # if no version is given by default latest stable release will be used.

Install provisioning components

-

Exporting necessary variables for baremetal operator and Ironic deployment.

# The URL of the kernel to deploy. export DEPLOY_KERNEL_URL="http://172.22.0.1:6180/images/ironic-python-agent.kernel" # The URL of the ramdisk to deploy. export DEPLOY_RAMDISK_URL="http://172.22.0.1:6180/images/ironic-python-agent.initramfs" # The URL of the Ironic endpoint. export IRONIC_URL="http://172.22.0.1:6385/v1/" # The URL of the Ironic inspector endpoint - only before BMO 0.5.0. #export IRONIC_INSPECTOR_URL="http://172.22.0.1:5050/v1/" # Do not use a dedicated CA certificate for Ironic API. # Any value provided in this variable disables additional CA certificate validation. # To provide a CA certificate, leave this variable unset. # If unset, then IRONIC_CA_CERT_B64 must be set. export IRONIC_NO_CA_CERT=true # Disables basic authentication for Ironic API. # Any value provided in this variable disables authentication. # To enable authentication, leave this variable unset. # If unset, then IRONIC_USERNAME and IRONIC_PASSWORD must be set. #export IRONIC_NO_BASIC_AUTH=true # Disables basic authentication for Ironic inspector API (when used). # Any value provided in this variable disables authentication. # To enable authentication, leave this variable unset. # If unset, then IRONIC_INSPECTOR_USERNAME and IRONIC_INSPECTOR_PASSWORD must be set. #export IRONIC_INSPECTOR_NO_BASIC_AUTH=true -

Launch baremetal operator.

# Clone BMO repo git clone https://github.com/metal3-io/baremetal-operator.git # Run deploy.sh ./baremetal-operator/tools/deploy.sh -b -k -t -

Launch Ironic.

# Run deploy.sh ./baremetal-operator/tools/deploy.sh -i -k -t

Create Secrets and BareMetalHosts

Create yaml files for each BareMetalHost that will be used. Below is an example.

---

apiVersion: v1

kind: Secret

metadata:

name: <<secret_name_bmh1>>

type: Opaque

data:

username: <<username_bmh1>>

password: <<password_bmh1>>

---

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: <<id_bmh1>>

spec:

online: true

bootMACAddress: <<mac_address_bmh1>>

bootMode: legacy

bmc:

address: <<address_bmh1>> // this depends on the protocol that are mentioned above, they depend on hardware vendor

credentialsName: <<secret_name_bmh1>>

disableCertificateVerification: true

Apply the manifests.

kubectl apply -f ./bmh1.yaml -n metal3

At this point, the BareMetalHosts will go through registering and

inspection phases before they become available.

Wait for all of them to be available. You can check their status with

kubectl get bmh -n metal3.

The next step is to create a workload cluster from these BareMetalHosts.

Create and apply cluster, control plane and worker template

#API endpoint IP and port for target cluster

export CLUSTER_APIENDPOINT_HOST="192.168.111.249"

export CLUSTER_APIENDPOINT_PORT="6443"

# Export node image variable and node image hash variable that we created before.

# Change name according to what was downloaded from artifactory

export IMAGE_URL=http://172.22.0.1/images/CENTOS_9_NODE_IMAGE_K8S_v1.33.0-raw.img

export IMAGE_CHECKSUM=http://172.22.0.1/images/CENTOS_9_NODE_IMAGE_K8S_v1.33.0-raw.img.sha256sum

export IMAGE_CHECKSUM_TYPE=sha256

export IMAGE_FORMAT=raw

# Generate templates with clusterctl, change control plane and worker count according to

# the number of BareMetalHosts

clusterctl generate cluster capm3-cluster \

--kubernetes-version v1.33.0 \

--control-plane-machine-count=3 \

--worker-machine-count=3 \

> capm3-cluster-template.yaml

# Apply the template

kubectl apply -f capm3-cluster-template.yaml

Bare Metal Operator

The Bare Metal Operator (BMO) is a Kubernetes controller that manages

bare-metal hosts, represented in Kubernetes by BareMetalHost (BMH) custom

resources.

BMO is responsible for the following operations:

- Inspecting the host’s hardware and reporting the details on the corresponding BareMetalHost. This includes information about CPUs, RAM, disks, NICs, and more.

- Optionally preparing the host by configuring RAID, changing firmware settings or updating the system and/or BMC firmware.

- Provisioning the host with a desired image.

- Cleaning the host’s disk contents before and after provisioning.

Under the hood, BMO uses Ironic to conduct these actions.

Enrolling BareMetalHosts

To enroll a bare-metal machine as a BareMetalHost, you need to know at least

the following properties:

- The IP address and credentials of the BMC - the remote management controller of the host.

- The protocol that the BMC understands. Most common are IPMI and Redfish. See supported hardware for more details.

- Boot technology that can be used with the host and the chosen protocol. Most hardware can use network booting, but some Redfish implementations also support virtual media (CD) boot.

- MAC address that is used for booting. Important: it’s a MAC address of an actual NIC of the host, not the BMC MAC address.

- The desired boot mode: UEFI or legacy BIOS. UEFI is the default and should be used unless there are serious reasons not to.

This is a minimal example of a valid BareMetalHost:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: node-0

namespace: metal3

spec:

bmc:

address: ipmi://192.168.111.1:6230

credentialsName: node-0-bmc-secret

bootMACAddress: 00:5a:91:3f:9a:bd

online: true

When this resource is created, it will undergo inspection that will populate

more fields as part of the status.

Deploying BareMetalHosts

To provision a bare-metal machine, you will need a few more properties:

- The URL and checksum of the image. Images should be in QCOW2 or raw format. It is common to use various cloud images with BMO, e.g. Ubuntu or CentOS. Important: not all images are compatible with UEFI boot - check their description.

- Optionally, user data: a secret with a configuration or a script that is interpreted by the first-boot service embedded in your image. The most common service is cloud-init, some distributions use ignition.

- Optionally, network data: a secret with the network configuration that is interpreted by the first-boot service. In some cases, the network data is embedded in the user data instead.

Here is a complete example of a host that will be provisioned with a CentOS 9 image:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: node-0

namespace: metal3

spec:

bmc:

address: ipmi://192.168.111.1:6230

credentialsName: node-0-bmc-secret

bootMACAddress: 00:5a:91:3f:9a:bd

image:

checksum: http://172.22.0.1/images/CENTOS_9_NODE_IMAGE_K8S_v1.33.0.qcow2.sha256sum

url: http://172.22.0.1/images/CENTOS_9_NODE_IMAGE_K8S_v1.33.0.qcow2

networkData:

name: test1-workers-tbwnz-networkdata

namespace: metal3

online: true

userData:

name: test1-workers-vd4gj

namespace: metal3

status:

hardware:

cpu:

arch: x86_64

count: 2

hostname: node-0

nics:

- ip: 172.22.0.73

mac: 00:5a:91:3f:9a:bd

name: enp1s0

ramMebibytes: 4096

storage:

- hctl: "0:0:0:0"

name: /dev/sda

serialNumber: drive-scsi0-0-0-0

sizeBytes: 53687091200

type: HDD

Integration with the cluster API

CAPM3 is the Metal3 component that is responsible for

integration between Cluster API resources and BareMetalHosts. When using Metal3

with CAPM3, you will enroll BareMetalHosts as described above first, then use

Metal3MachineTemplate to describe how hosts should be deployed, i.e. which

images and user data to use.

This happens for example when the user scales a MachineDeployment so that the server should be added to the cluster, or during an upgrade when it must change the image it is booting from:

Install Baremetal Operator

Installing Baremetal Operator (BMO) involves usually three steps:

- Clone Metal3 BMO repository

https://github.com/metal3-io/baremetal-operator.git. - Adapt the configuration settings to your specific needs.

- Deploy BMO in the cluster with or without Ironic.

Note: This guide assumes that a local clone of the repository is available.

Configuration Settings

Review and edit the file ironic.env found in config/default.

The operator supports several configuration options for controlling

its interaction with Ironic.

DEPLOY_RAMDISK_URL – The URL for the ramdisk of the image

containing the Ironic agent.

DEPLOY_KERNEL_URL – The URL for the kernel to go with the deploy

ramdisk.

DEPLOY_ISO_URL – The URL for the ISO containing the Ironic agent for

drivers that support ISO boot. Optional if kernel/ramdisk are set.

IRONIC_ENDPOINT – The URL for the operator to use when talking to

Ironic.

IRONIC_CACERT_FILE – The path of the CA certificate file of Ironic, if needed

IRONIC_INSECURE – (“True”, “False”) Whether to skip the ironic certificate

validation. It is highly recommend to not set it to True.

IRONIC_CLIENT_CERT_FILE – The path of the Client certificate file of Ironic,

if needed. Both Client certificate and Client private key must be defined for

client certificate authentication (mTLS) to be enabled.

IRONIC_CLIENT_PRIVATE_KEY_FILE – The path of the Client private key file of Ironic,

if needed. Both Client certificate and Client private key must be defined for

client certificate authentication (mTLS) to be enabled.

IRONIC_SKIP_CLIENT_SAN_VERIFY – (“True”, “False”) Whether to skip the ironic

client certificate SAN validation.

BMO_CONCURRENCY – The number of concurrent reconciles performed by the

Operator. Default is the number of CPUs, but no less than 2 and no more than 8.

PROVISIONING_LIMIT – The desired maximum number of hosts that could be (de)provisioned

simultaneously by the Operator. The limit does not apply to hosts that use

virtual media for provisioning. The Operator will try to enforce this limit,

but overflows could happen in case of slow provisioners and / or higher number of

concurrent reconciles. For such reasons, it is highly recommended to keep

BMO_CONCURRENCY value lower than the requested PROVISIONING_LIMIT. Default is 20.

IRONIC_EXTERNAL_URL_V6 – This is the URL where Ironic will find the image

for nodes that use IPv6. In dual stack environments, this can be used to tell

Ironic which IP version it should set on the BMC.

Deprecated options

IRONIC_INSPECTOR_ENDPOINT – The URL for the operator to use when talking to

Ironic Inspector. Only supported before baremetal-operator 0.5.0.

Kustomization Configuration

It is possible to deploy baremetal-operator with three different operator

configurations, namely:

- operator with ironic

- operator without ironic

- ironic without operator

A detailed overview of the configuration is presented in the following sections.

Notes on external Ironic

When an external Ironic is used, the following requirements must be met:

-

Either HTTP basic or no-auth authentication must be used (Keystone is not supported).

-

API version 1.74 (Xena release cycle) or newer must be available.

Authenticating to Ironic

Because hosts under the control of Metal³ need to contact the Ironic API during inspection and provisioning, it is highly advisable to require authentication on those APIs, since the provisioned hosts running user workloads will remain connected to the provisioning network.

Configuration

The baremetal-operator supports connecting to Ironic with the following

auth_strategy modes:

noauth(no authentication - not recommended)http_basic(HTTP Basic access authentication)

Note that Keystone (OpenStack Identity) authentication methods are not yet supported.

Authentication configuration is read from the filesystem, beginning at the root

directory specified in the environment variable METAL3_AUTH_ROOT_DIR. If this

variable is empty or not specified, the default is /opt/metal3/auth.

Within the root directory, there is a separate subdirectory ironic for

Ironic client configuration.

noauth

This is the default, and will be chosen if the auth root directory does not exist. In this mode, the baremetal-operator does not attempt to do any authentication against the Ironic APIs.

http_basic

This mode is configured by files in each authentication subdirectory named

username and password, and containing the Basic auth username and password,

respectively.

Running Bare Metal Operator with or without Ironic

This section explains the deployment scenarios of deploying Bare Metal Operator(BMO) with or without Ironic as well as deploying only Ironic scenario.

These are the deployment use cases addressed:

-

Deploying baremetal-operator with Ironic.

-

Deploying baremetal-operator without Ironic.

-

Deploying only Ironic.

Current structure of baremetal-operator config directory

tree config/

config/

├── basic-auth

│ ├── default

│ │ ├── credentials_patch.yaml

│ │ └── kustomization.yaml

│ └── tls

│ ├── credentials_patch.yaml

│ └── kustomization.yaml

├── certmanager

│ ├── certificate.yaml

│ ├── kustomization.yaml

│ └── kustomizeconfig.yaml

├── crd

│ ├── bases

│ │ ├── metal3.io_baremetalhosts.yaml

│ │ ├── metal3.io_firmwareschemas.yaml

│ │ └── metal3.io_hostfirmwaresettings.yaml

│ ├── kustomization.yaml

│ ├── kustomizeconfig.yaml

│ └── patches

│ ├── cainjection_in_baremetalhosts.yaml

│ ├── cainjection_in_firmwareschemas.yaml

│ ├── cainjection_in_hostfirmwaresettings.yaml

│ ├── webhook_in_baremetalhosts.yaml

│ ├── webhook_in_firmwareschemas.yaml

│ └── webhook_in_hostfirmwaresettings.yaml

├── default

│ ├── ironic.env

│ ├── kustomization.yaml

│ ├── manager_auth_proxy_patch.yaml

│ ├── manager_webhook_patch.yaml

│ └── webhookcainjection_patch.yaml

├── kustomization.yaml

├── manager

│ ├── kustomization.yaml

│ └── manager.yaml

├── namespace

│ ├── kustomization.yaml

│ └── namespace.yaml

├── OWNERS

├── prometheus

│ ├── kustomization.yaml

│ └── monitor.yaml

├── rbac

│ ├── auth_proxy_client_clusterrole.yaml

│ ├── auth_proxy_role_binding.yaml

│ ├── auth_proxy_role.yaml

│ ├── auth_proxy_service.yaml

│ ├── baremetalhost_editor_role.yaml

│ ├── baremetalhost_viewer_role.yaml

│ ├── firmwareschema_editor_role.yaml

│ ├── firmwareschema_viewer_role.yaml

│ ├── hostfirmwaresettings_editor_role.yaml

│ ├── hostfirmwaresettings_viewer_role.yaml

│ ├── kustomization.yaml

│ ├── leader_election_role_binding.yaml

│ ├── leader_election_role.yaml

│ ├── role_binding.yaml

│ └── role.yaml

├── render

│ └── capm3.yaml

├── samples

│ ├── metal3.io_v1alpha1_baremetalhost.yaml

│ ├── metal3.io_v1alpha1_firmwareschema.yaml

│ └── metal3.io_v1alpha1_hostfirmwaresettings.yaml

├── tls

│ ├── kustomization.yaml

│ └── tls_ca_patch.yaml

└── webhook

├── kustomization.yaml

├── kustomizeconfig.yaml

├── manifests.yaml

└── service_patch.yaml

The config directory has one top level folder for deployment, namely default

and it deploys only baremetal-operator through kustomization file calling

manager folder. In addition, basic-auth, certmanager, crd, namespace,

prometheus, rbac, tls and webhook folders have their own kustomization

and yaml files. samples folder includes yaml representation of sample CRDs.

Current structure of ironic-deployment directory

tree ironic-deployment/

ironic-deployment/

├── base

│ ├── ironic.yaml

│ └── kustomization.yaml

├── components

│ ├── basic-auth

│ │ ├── auth.yaml

│ │ ├── ironic-auth-config

│ │ ├── ironic-auth-config-tpl

│ │ ├── ironic-htpasswd

│ │ └── kustomization.yaml

│ ├── keepalived

│ │ ├── ironic_bmo_configmap.env

│ │ ├── keepalived_patch.yaml

│ │ └── kustomization.yaml

│ └── tls

│ ├── certificate.yaml

│ ├── kustomization.yaml

│ ├── kustomizeconfig.yaml

│ └── tls.yaml

├── default

│ ├── ironic_bmo_configmap.env

│ └── kustomization.yaml

├── overlays

│ ├── basic-auth_tls

│ │ ├── basic-auth_tls.yaml

│ │ └── kustomization.yaml

│ └── basic-auth_tls_keepalived

│ └── kustomization.yaml

├── OWNERS

└── README.md

The ironic-deployment folder contains kustomizations for deploying Ironic.

It makes use of kustomize components for basic auth, TLS and keepalived configurations.

This makes it easy to combine the configurations, for example basic auth + TLS.

There are some ready made overlays in the overlays folder that shows how this

can be done. For more information, check the readme in the ironic-deployment

folder.

Deployment commands

There is a useful deployment script that configures and deploys BareMetal Operator and Ironic. It requires some variables :

- IRONIC_HOST : domain name for Ironic

- IRONIC_HOST_IP : IP on which Ironic is listening

In addition you can configure the following variables. They are optional. If you leave them unset, then passwords and certificates will be generated for you.

- KUBECTL_ARGS : Additional arguments to kubectl apply

- IRONIC_USERNAME : username for ironic

- IRONIC_PASSWORD : password for ironic

- IRONIC_CACERT_FILE : CA certificate path for ironic

- IRONIC_CAKEY_FILE : CA certificate key path, unneeded if ironic

- certificates exist

- IRONIC_CERT_FILE : Ironic certificate path

- IRONIC_KEY_FILE : Ironic certificate key path

- MARIADB_KEY_FILE: Path to the key of MariaDB

- MARIADB_CERT_FILE: Path to the cert of MariaDB

- MARIADB_CAKEY_FILE: Path to the CA key of MariaDB

- MARIADB_CACERT_FILE: Path to the CA certificate of MariaDB

Before version 0.5.0, Ironic Inspector parameters were also used:

- IRONIC_INSPECTOR_USERNAME : username for inspector

- IRONIC_INSPECTOR_PASSWORD : password for inspector

- IRONIC_INSPECTOR_CERT_FILE : Inspector certificate path

- IRONIC_INSPECTOR_KEY_FILE : Inspector certificate key path

- IRONIC_INSPECTOR_CACERT_FILE : CA certificate path for inspector, defaults to IRONIC_CACERT_FILE

- IRONIC_INSPECTOR_CAKEY_FILE : CA certificate key path, unneeded if inspector certificates exist

Then run :

./tools/deploy.sh [-b -i -t -n -k]

-b: deploy BMO-i: deploy Ironic-t: deploy with TLS enabled-n: deploy without authentication-k: deploy with keepalived

This will deploy BMO and / or Ironic with the proper configuration.

Useful tips

It is worth mentioning some tips for when the different configurations are useful as well. For example:

-

Only BMO is deployed, in a case when Ironic is already running, e.g. as part of Cluster API Provider Metal3 (CAPM3) when a successful pivoting state was met and ironic being deployed.

-

BMO and Ironic are deployed together, in a case when CAPM3 is not used and baremetal-operator and ironic containers to be deployed together.

-

Only Ironic is deployed, in a case when BMO is deployed as part of CAPM3 and only Ironic setup is sufficient, e.g. clusterctl provided by Cluster API(CAPI) deploys BMO, so that it can take care of moving the BaremetalHost during the pivoting.

Important Note When the baremetal-operator is deployed through metal3-dev-env, baremetal-operator container inherits the following environment variables through configmap:

$PROVISIONING_IP

$PROVISIONING_INTERFACE

In case you are deploying baremetal-operator locally, make sure to populate and export these environment variables before deploying.

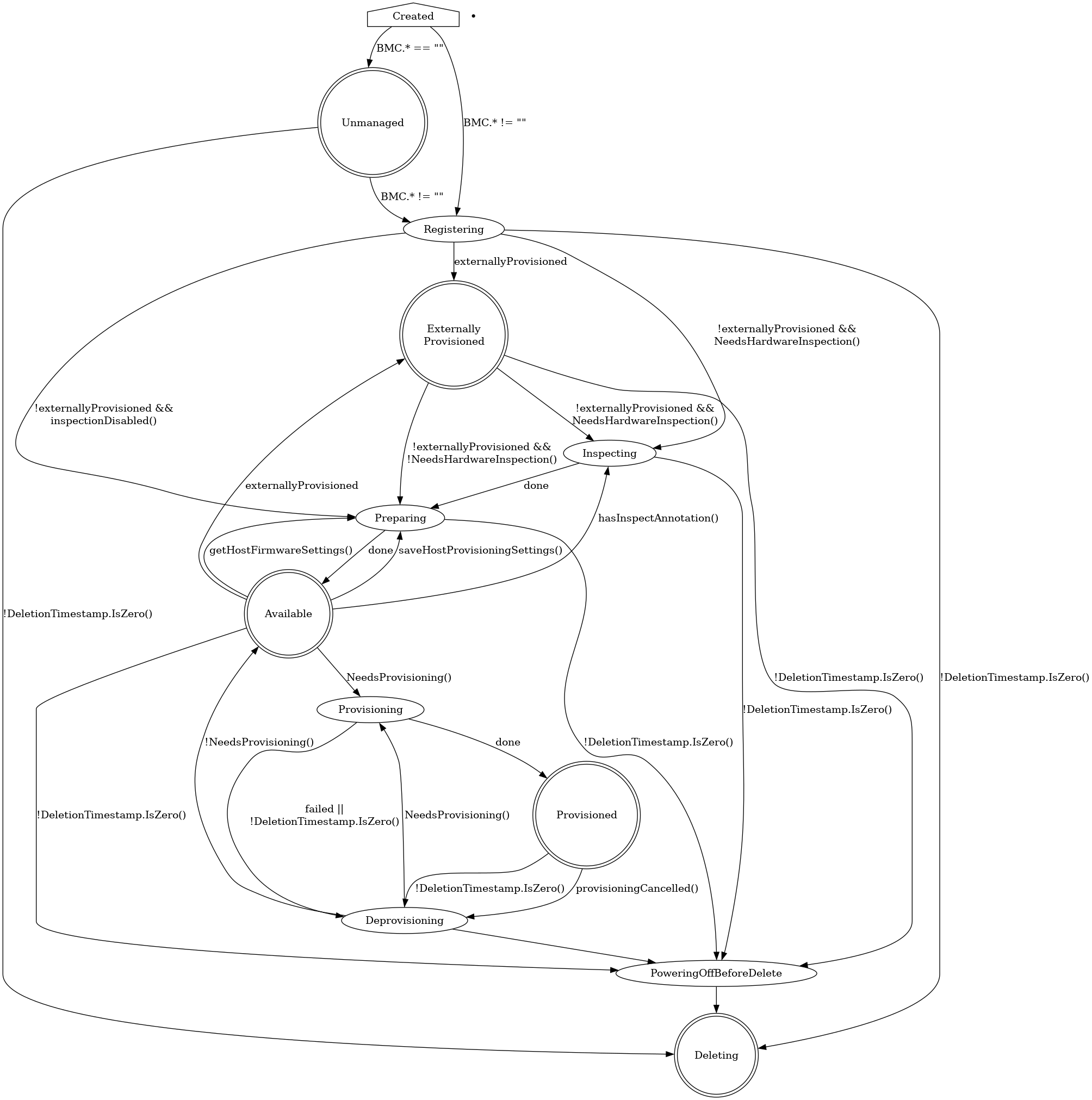

Host State Machine

During its lifetime, a BareMetalHost resource goes through a series of

various states. Some of them are stable (the host stays in them indefinitely

without user input), some are transient (the state will change once a certain

operation completes). These fields in the status resource define the current

state of the host:

status.provisioning.state– the current phase of the provisioning process.status.operationHistory– the history of the main provisioning phases: registration, inspection, provisioning and deprovisioning.status.operationalStatus– the overall status of the host.status.errorType– the type of the current error (if any).status.poweredOn– the current power state of the host.

This is how the status of a healthy provisioned host may look like:

status:

# ...

operationHistory:

deprovision:

end: null

start: null

inspect:

end: "2024-06-17T13:09:07Z"

start: "2024-06-17T13:03:54Z"

provision:

end: "2024-06-17T13:11:18Z"

start: "2024-06-17T13:09:26Z"

register:

end: "2024-06-17T13:03:54Z"

start: "2024-06-17T12:54:18Z"

operationalStatus: OK

poweredOn: true

provisioning:

ID: e09032ea-1b7d-4c50-bfcd-b94ff7e8d431

bootMode: UEFI

image:

checksumType: sha256

checksum: http://192.168.0.150/SHA256SUMS

format: qcow2

url: http://192.168.0.150/jammy-server-cloudimg-amd64.img

rootDeviceHints:

deviceName: /dev/sda

state: provisioned

# ...

OperationalStatus

OK– the host is healthy and operational.discovered– the host is known to Metal3 but lacks the required information for the normal operation (usually, the BMC credentials).error– error has occurred, see thestatus.errorTypeandstatus.errorMessagefields for details.delayed– cannot proceed with the provisioning because the maximum number of the hosts in the given state has been reached.detached– the host is detached, no provisioning actions are possible (see detached annotation for details).

Provisioning state machine

Provisioning states

Creating

Newly created hosts get an empty provisioning state briefly before moving

either to unmanaged or registering.

Unmanaged

An unmanaged host is missing both the BMC address and credentials

secret name, and does not have any information to access the BMC

for registration.

The corresponding operational status is discovered.

Externally Provisioned

An externally provisioned host has been deployed

using another tool. Hosts reach this state when they are created with the

externallyProvisioned field set to true. Hosts in this state are monitored,

and only their power status is managed.

Registering

The host will stay in the registering state while the BMC access details are

being validated.

Inspecting

After the host is registered, an IPA ramdisk will be booted on it. The agent

collects information about the available hardware components and sends it back

to Metal3. The host will stay in the inspecting state until this process is

completed.

Preparing

When setting up RAID or changing firmware settings, the host will be in

preparing state.

Available

A host in the available state is ready to be provisioned. It will move to the

provisioning state once the image field is populated.

Provisioning

While an image is being copied to the host, and the host is configured

to run the image, the host will be in the provisioning state.

Provisioned

After an image is copied to the host and the host is running the

image, it will be in the provisioned state.

Deprovisioning

When the previously provisioned image is being removed from the host,

it will be in the deprovisioning state.

Powering off before delete

When the host that is not currently unmanaged is marked to be deleted, it

will be powered off first and will stay in the powering off before delete

until it’s done or until the retry limit is reached.

Deleting

When the host is marked to be deleted and has been successfully powered off, it

will move from its current state to deleting, at which point the resource

record is deleted.

Supported hardware

Metal3 supports many vendors and models of enterprise-grade hardware with a BMC (Baseboard Management Controller) that supports one of the remote management protocols described in this document. On top of that, one of the two boot methods must be supported:

-

Network boot. Most hardware supports booting a Linux kernel and initramfs via TFTP. Metal3 augments it with iPXE - a higher level network boot firmware with support for scripting and TCP-based protocols such as HTTP.

Booting over network relies on DHCP and thus requires a provisioning network for isolated L2 traffic between the Metal3 control plane and the machines.

-

Virtual media boot. Some hardware model support directly booting an ISO 9660 image as a virtual CD device over HTTP(s). An important benefit of this approach is the ability to boot hardware over L3 networks, potentially without DHCP at all.

IPMI

IPMI is the oldest and by far the most widely available remote management protocol. Nearly all enterprise-grade hardware supports it. Its downside include reduced reliability and a weak security, especially if not configured properly.

WARNING: only network boot over iPXE is supported for IPMI.

| BMC address format | Notes |

|---|---|

ipmi://<host>:<port> | Port is optional, defaults to 623. |

<host>:<port> | IPMI is the default protocol in Metal3. |

Redfish and its variants

Redfish is a vendor-agnostic protocol for remote hardware management. It is based on HTTP(s) and JSON and thus does not suffer from the limitations of IPMI. It also exposes modern features such as virtual media boot, RAID management, firmware settings and updates.

Ironic (and thus Metal3) aims to support Redfish as closely to the standard as possible, with a few workarounds for known issues and explicit support for Dell iDRAC. Note, however, that all features are optional in Redfish, so you may encounter a Redfish-capable hardware that is not supported by Metal3. Furthermore, some features (such as virtual media boot) may require buying an additional license to function.

Since a Redfish API endpoint can manage several servers (systems in Redfish

terminology), BMC addresses for Redfish-based drivers include a system ID -

the URL of the particular server. For Dell machines it usually looks like

/redfish/v1/Systems/System.Embedded.1, while other vendors may simply use

/redfish/v1/Systems/1. Check the hardware documentation to find out which

format is right for your machine.

| Technology | Boot method | BMC address format | Notes |

|---|---|---|---|

| Generic Redfish | iPXE | redfish://<host>:<port>/<systemID> | |

| Virtual media | redfish-virtualmedia://<host>:<port>/<systemID> | Must not be used for Dell machines. | |

| Dell iDRAC 8+ | iPXE | idrac-redfish://<host>:<port>/<systemID> | |

| Virtual media | idrac-virtualmedia://<host>:<port>/<systemID> | Requires firmware v6.10.30.00+ for iDRAC 9, v2.75.75.75+ for iDRAC 8. | |

| HPE iLO 5 and 6 | iPXE | ilo5-redfish://<host>:<port>/<systemID> | An alias of redfish for convenience. RAID management only on iLO 6. |

| Virtual media | ilo5-virtualmedia://<host>:<port>/<systemID> | An alias of redfish for convenience. RAID management only on iLO 6. |

Users have also reported success with certain models of SuperMicro, Lenovo, ZT Systems and Cisco UCS hardware, but hardware from these vendors is not regularly tested by the team.

All drivers based on Redfish allow optionally specifying the carrier protocol

in the form of +http or +https, for example: redfish+http://... or

idrac-virtualmedia+https. When not specified, HTTPS is used by default.

Redfish interoperability

As noted above, Redfish allows for very different valid implementations, some of which are not compatible with Ironic (and thus Metal3). The Ironic project publishes a Redfish interoperability profile – a JSON document that describes the required and optionally supported Redfish API features. Its available versions can be found in the Ironic source tree. The Redfish-Interop-Validator tool can be used to validate a server against this profile.

Check the Ironic interoperability documentation for a rendered version of the latest profile. All features required for Ironic are also required for Metal3. Most optional features except for the out-of-band inspection are also supported, although the hardware metrics support via ironic-prometheus-exporter is currently experimental and undocumented.

Vendor-specific protocols

| Technology | Protocol | Boot method | BMC address format | Notes |

|---|---|---|---|---|

| Fujitsu iRMC | iRMC | iPXE | irmc://<host>:<port> | Deprecated, to be removed after BMO 0.12. |

| HPE iLO 4 | iLO | iPXE | ilo4://<host>:<port> | Removed after BMO 0.11 / Ironic 32.0. |

| iLO | Virtual media | ilo4-virtualmedia://<host>:<port> | Removed after BMO 0.11 / Ironic 32.0. | |

| HPE iLO 5 | iLO | iPXE | ilo5://<host>:<port> | Removed after BMO 0.11 / Ironic 32.0. |

Baremetal Operator features

Basic features

- Provisioning and Deprovisioning

- Automated Cleaning

- Automatic Secure Boot

- Controlling Inspection

- Firmware Settings

- Firmware Updates

- Instance Customization

- RAID Setup

- Rebooting Hosts

- Specifying Root Device

Provisioning and Deprovisioning

The most fundamental feature of Metal3 Bare Metal Operator is provisioning of

bare-metal machines with a user-provided image. This document explains how to

provision machines using the BareMetalHost API directly. Users of the Cluster

API should consult the CAPM3 documentation instead.

Provisioning

A freshly enrolled host gets provisioned when the two conditions are met:

- the state is

available(see state machine), - either its

imagefield or itscustomDeployfield is not empty.

NOTE: customDeploy is an advanced feature that is not covered in this

document.

To start the provisioning process, you need at least two bits of information:

- the URL of the image you want to put on the target host,

- the value or the URL of the image checksum using either SHA256 or SHA512 (MD5 is supported but deprecated and not compatible with FIPS 140 mode).

The minimum example looks like this:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: host-0

namespace: my-cluster

spec:

online: true

bootMACAddress: 80:c1:6e:7a:e8:10

bmc:

address: ipmi://192.168.1.13

credentialsName: host-0-bmc

image:

checksum: http://192.168.0.150/SHA256SUMS

url: http://192.168.0.150/jammy-server-cloudimg-amd64.img

checksumType: auto

In most real cases, you will also want to provide

- first-boot configuration as described in instance customization,

- hints to choose the target root device,

- the format of the image you use.

As a result, a more complete example will look like this:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: host-0

namespace: my-cluster

spec:

online: true

bootMACAddress: 80:c1:6e:7a:e8:10

bmc:

address: ipmi://192.168.1.13

credentialsName: host-0-bmc

image:

checksum: http://192.168.0.150/SHA256SUMS

url: http://192.168.0.150/jammy-server-cloudimg-amd64.img

checksumType: auto

format: raw

rootDeviceHints:

wwn: "0x55cd2e415652abcd"

userData:

name: host-0-userdata

When the provisioning state of the host becomes provisioned, your instance is

ready to use. Note, however, that booting the operating system and applying the

first boot scripts will take a few more minutes after that.

Note on images

Two image formats are commonly used with Metal3: QEMU’s qcow2 and raw disk images. Both formats have their upsides and downsides:

-

Qcow images are usually smaller and thus require less network bandwidth to transfer, especially if you provision many machines with different images at the same time.

-

Raw images can be streamed directly from the remote location to the target block device without any conversion. However, they can be very large.

When the format is omitted, Ironic will download the image into the local cache

and inspect its format. If you want to use the streaming feature, you need to

provide the raw format explicitly. If you want to forcibly cache the image

(for example, because the remote image server is not accessible from the

machine being provisioned), omit the format or use qcow2 images.

HINT: cloud-init is capable of growing the last partition to occupy the remaining free space. Use this feature instead of creating very large raw images with a lot of empty space.

NOTE: the special format value live-iso triggers a live ISO

provisioning that works differently from a normal one.

Notes on checksums

Starting from BMO v0.10.0, leaving checksumType empty prompts Ironic to

automatically detect the checksum type based on its length. In earlier versions,

this behavior can be achieved by setting checksumType to auto.

The checksum value can be provided either as a URL or as the hash value

directly. Providing a URL is more convenient in case of public cloud images,

but it provides a weaker defense against man-in-the-middle attacks.

Deprovisioning

To remove an instance from the host and make it available for new deployments,

remove the image, userData, networkData, metaData and customDeploy

fields (if present). Depending on the host configuration, it will either start

the automated cleaning process or will become

available right away.

Reprovisioning

If you want to apply a new image or new user or network data to the host, you need to deprovision and provision it again. This can be done in two ways:

-

If the URL of the image changes, the re-provisioning process will start automatically. Make sure to update the user and network data in the same or earlier edit operation.

-

If the URL of the image is the same, you need to remove the

imagefield, then add it back once the state of theBareMetalHostchanges todeprovisioning.

WARNING: updating the userData and networkData fields alone does not

trigger a new provisioning.

Automated Cleaning

One of the Ironic’s feature exposed to Metal3 Baremetal Operator is node automated cleaning. When enabled, automated cleaning kicks off when a node is provisioned first time and on every deprovisioning.

There are two automated cleaning modes available which can be configured via

automatedCleaningMode field of a BareMetalHost spec:

metadata(the default) enables the removal of partitioning tables from all disksdisableddisables the cleaning process

For example:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: example-host

spec:

automatedCleaningMode: metadata

bootMACAddress: 00:8a:b6:8e:ac:b8

bmc:

address: ipmi://192.168.111.1:6230

credentialsName: example-node-bmc-secret

online: true

Note: Ironic supports full data removal, which is not currently exposed in Metal3.

For a host with cleaning disabled, no cleaning will be performed during deprovisioning. This is faster but may cause conflicts on subsequent provisionings (e.g. Ceph is known not to tolerate stale data partitions).

Warning: when disabling cleaning, consider setting root device hints to specify the exact block device to install to. Otherwise, subsequent provisionings may end up with different root devices, potentially causing incorrect configuration because of duplicated config drives.

If you are using Cluster-api-provider-metal3, please see its cleaning documentation.

Automatic secure boot

The automatic secure boot feature allows enabling and disabling UEFI (Unified

Extensible Firmware Interface) secure boot when provisioning a host. This

feature requires supported hardware and compatible OS image. The current

hardwares that support enabling UEFI secure boot are iLO, iRMC and

Redfish drivers.

Check also:

Why do we need it

We need the Automatic secure boot when provisioning a host with high security requirements. Based on checksum and signature, the secure boot protects the host from loading malicious code in the boot process before loading the provisioned operating system.

How to use it

To enable Automatic secure boot, first check if hardware is supported and then

specify the value UEFISecureBoot for bootMode in the BareMetalHost custom

resource. Please note, it is enabled before booting into the deployed instance

and disabled when the ramdisk is running and on tear down. Below you can check

the example:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: node-1

spec:

online: true

bootMACAddress: 00:5c:52:31:3a:9c

bootMode: UEFISecureBoot

...

This will enable UEFI before booting the instance and disable it when

deprovisioned. Note that the default value for bootMode is UEFI.

Controlling Inspection

Re-running inspection

The inspect annotation can be used to request the BareMetal Operator to

(re-)inspect an available BareMetalHost, for example, when the hardware

changes. If an inspection request is made while the host is any other

state than available, the request will be ignored.

To request a new inspection, simply annotate the host with inspect.metal3.io.

Once inspection is requested, you should see the BMH in inspecting state

until inspection is completed, and by the end of inspection the

inspect.metal3.io annotation will be removed automatically.

Here is an example:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: example

annotations:

# The inspect annotation with no value

inspect.metal3.io: ""

spec:

...

Disabling inspection

If you do not need the HardwareData collected by inspection, you can disable it

by setting the inspect.metal3.io annotation to disabled, for example:

apiVersion: metal3.io/v1alpha1

kind: BareMetalHost

metadata:

name: example

annotations:

inspect.metal3.io: disabled

spec:

...

For advanced use cases, such as providing externally gathered inspection data, see external inspection.

Firmware Settings

Metal3 supports modifying firmware settings of the hosts before provisioning them. This feature can be used, for example, to enable or disable CPU virtualization extensions, huge pages or SRIOV support. The corresponding functionality in Ironic is called BIOS settings.

Reading and modifying firmware settings is only supported for drivers based on Redfish, iRMC or iLO (see supported hardware). The commonly used IPMI driver does not support this feature.

HostFirmwareSettings Resources

A HostFirmwareSettings resource is automatically created for each host that

supports firmware settings with the same name and in the same namespace as

host. BareMetal Operator puts the current settings in the status.settings

field:

apiVersion: metal3.io/v1alpha1

kind: HostFirmwareSettings

metadata:

creationTimestamp: "2024-05-28T16:31:06Z"

generation: 1

name: worker-0

namespace: my-cluster

ownerReferences:

- apiVersion: metal3.io/v1alpha1

blockOwnerDeletion: true

controller: true

kind: BareMetalHost

name: worker-0

uid: 663a1453-d4d8-43a3-b459-64ea94d1435f

resourceVersion: "20653"

uid: 46fc9ccb-0717-4ced-93aa-babbe1a8cd5b

spec:

settings: {}

status:

conditions:

- lastTransitionTime: "2024-05-28T16:31:06Z"

message: ""

observedGeneration: 1

reason: Success

status: "True"

type: Valid

- lastTransitionTime: "2024-05-28T16:31:06Z"

message: ""

observedGeneration: 1

reason: Success

status: "False"

type: ChangeDetected

lastUpdated: "2024-05-28T16:31:06Z"

schema:

name: schema-f229959d

namespace: my-cluster

settings:

BootMode: Uefi

EmbeddedSata: Raid

L2Cache: 10x256 KB

NicBoot1: NetworkBoot

NumCores: "10"

ProcTurboMode: Enabled

QuietBoot: "true"

SecureBootStatus: Enabled

SerialNumber: QPX12345

In this example (taken from a virtual testing environment):

-

The

spec.settingsmapping is empty - no change is requested by the user. -

The

status.settingsmapping is populated with the current values detected by Ironic. -

The

Validcondition isTrue, which means thatspec.settingsare valid according to the host’sFirmwareSchema. The condition will be set toFalseif any value inspec.settingsfails validation. -

The

ChangeDetectedcondition isFalse, which means that the desired settings and the real settings do not diverge. This condition will be set toTrueafter you modifyspec.settingsuntil the change is reflected instatus.settings. -

The

schemafield contains a link to the firmware schema (see below).

Warning: Ironic does not constantly update the current settings to avoid an unnecessary load on the host’s BMC. The current settings are updated on enrollment, provisioning and deprovisioning only.

FirmwareSchema resources

One or more FirmwareSchema resources are created for hosts that support

firmware settings. Each schema object represents a list of possible settings

and limits on their values.

apiVersion: metal3.io/v1alpha1

kind: FirmwareSchema

metadata:

creationTimestamp: "2024-05-28T16:31:06Z"

generation: 1

name: schema-f229959d

namespace: my-cluster

ownerReferences:

- apiVersion: metal3.io/v1alpha1

kind: HostFirmwareSettings

name: worker-1

uid: bd97a81c-c736-4a6d-aee5-32dccb26e366

- apiVersion: metal3.io/v1alpha1

kind: HostFirmwareSettings

name: worker-0

uid: d8fb3c8a-395e-4c0a-9171-5928a68305b3

spec:

hardwareModel: KVM (8.6.0)

hardwareVendor: Red Hat

schema:

BootMode:

allowable_values:

- Bios

- Uefi

attribute_type: Enumeration

read_only: false

NumCores:

attribute_type: Integer

lower_bound: 10

read_only: true

unique: false

upper_bound: 20

QuietBoot:

attribute_type: Boolean

read_only: false

unique: false

The following fields are included for each setting:

attribute_type– The type of the setting (Enumeration,Integer,String,Boolean, orPassword).read_only– The setting is read-only and cannot be modified.unique– The setting’s value is unique in this host (e.g. serial numbers).

For type Enumeration:

allowable_values– A list of allowable values.

For type Integer:

lower_bound– The lowest allowed integer value.upper_bound– The highest allowed integer value.

For type String:

min_length– The minimum length that the string value can have.max_length– The maximum length that the string value can have.

Note: the FirmwareSchema has a unique identifier derived from its

settings and limits. Multiple hosts may therefore have the same

FirmwareSchema identifier so its likely that more than one

HostFirmwareSettings reference the same FirmwareSchema when hardware of the

same vendor and model are used.

How to change firmware settings

To change one or more settings for a host, update the corresponding

HostFirmwareSettings resource, changing or adding the required settings to

spec.settings. For example:

apiVersion: metal3.io/v1alpha1

kind: HostFirmwareSettings

metadata:

name: worker-0

namespace: my-cluster

# ...

spec:

settings:

QuietBoot: true

status:

# ...

Hint: you don’t need to copy over the settings you don’t want to change.

If the host is in the available state, it will be moved to the preparing

state and the new settings will be applied. After some time, the host will move

back to available, and the resulting changes will be reflected in the

status of the HostFirmwareSettings object. Applying firmware settings